As I continue to explore Apple’s newest OS, visionOS, I'm increasingly excited by the potential of both the device and the field of spatial design. However, I can't shake the sense that Apple’s toolkit lacks context, or more pointedly, contextual awareness. Intuitively, I feel that spatial computing should be contextually aware.

Contextual awareness is a cornerstone of UX design: it stresses the importance of the context in which a user runs an application, so that the UX can adapt to the user’s social, emotional, and physical surroundings. (Source: Wikipedia)

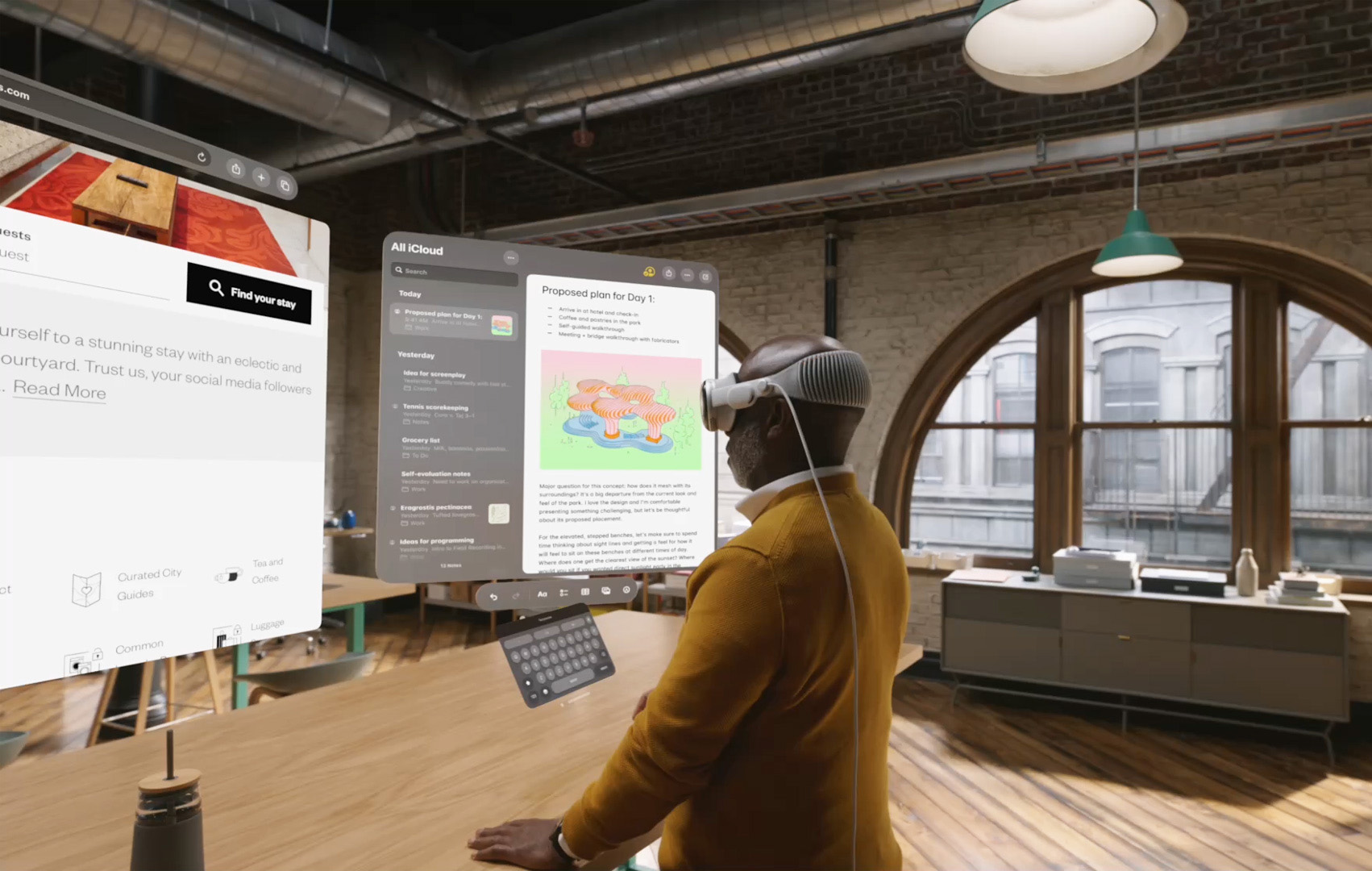

- Windows: Within your visionOS app, you can create one or more windows. Built with SwiftUI, they contain conventional views and controls, and you can add depth to the experience with 3D content.

- Volumes: Add depth to your application with a 3D volume. Volumes are SwiftUI scenes that display 3D content through RealityKit or Unity, creating experiences that can be viewed from any angle in the Shared Space or an app’s Full Space.

- Spaces: By default, applications launch into the Shared Space, where they sit alongside each other, much like multiple apps on a Mac desktop. Apps can use windows and volumes to display content, and users can reposition these elements at will. For a more immersive experience, an app can open a dedicated Full Space, where only that app’s content appears. Within a Full Space, an app can use windows and volumes, create unbounded 3D content, open a portal to a different world, or fully immerse people in an environment.

Here, context can mean several things: work, relaxation, or leisure. Each could be shaped by factors such as location, time, or current activity, and those factors can in turn influence one another. Apple already ships a rudimentary contextual feature called ‘Focus’ across macOS, iOS, and iPadOS. To a degree, it lets users define a time, location, and purpose and have the device adjust to those settings, helping the user concentrate or, in the case of sleep, limiting disruptions.

With visionOS and Vision Pro, the idea of context can be taken further. With this in mind, I've explored the concept of context and how it applies across different tasks. The first step is to weave ‘Context’ into Apple’s building blocks, so that Windows, Volumes, and Spaces all become context-aware.

Context:

Context can be woven into Windows, Volumes, and Spaces. It can also be tied to an iPhone or iPad, a HomePod or an iBeacon, or even an Apple AirTag, improving Vision Pro and visionOS's understanding of the current situation. Context turns an immersive experience into one that’s personal, intuitive, and efficient.

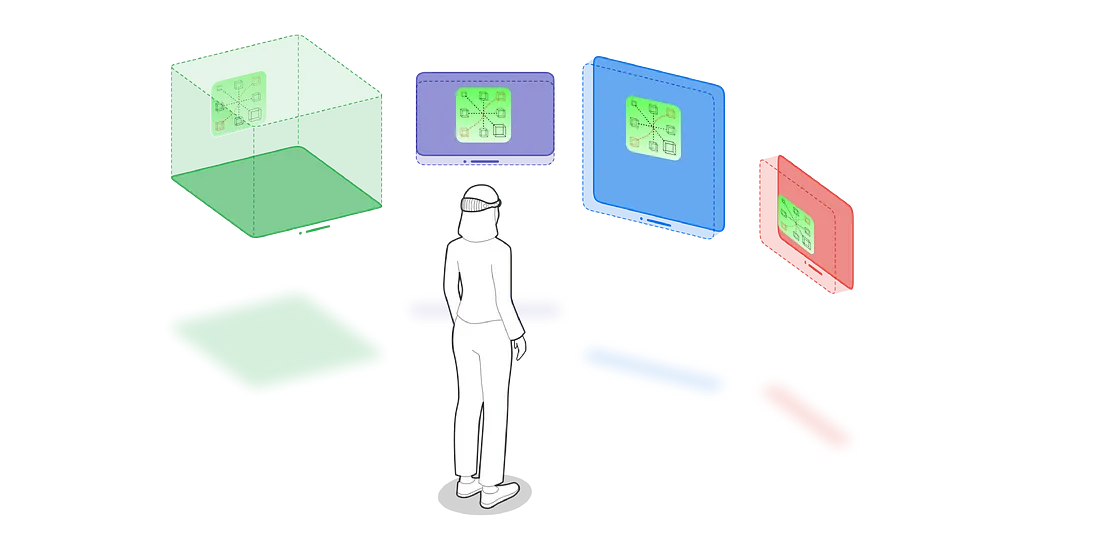

Context mapping requires a deep understanding of user behaviours, and task analysis to put it into practice. How might context manifest? Suppose a user enters a space, for instance, in the image below, a room equipped with a HomePod or an iBeacon. These devices tell Vision Pro where the user is, and the headset proposes apps or content, or waits for instructions, based on what the user is likely to do next.

The next image extends Apple’s ‘Focus’ capability, combining spatial computing and spaces to provide context. In this instance, the user steps into their home office and the contextual function proposes three key ‘Focus Spaces’: Work, Research, and Personal. Based on past activity in this room, these are the most frequently activated Focus Spaces.

Guided by eye-tracking, the user selects the 'Work' Focus Space. This selection causes leisure apps like Apple TV and Music to recede and brings work-centric applications forward. The user can now expand the ‘Work’ Focus Space and is shown several alternatives, all of which they have used before. Once they choose one, the interface transitions, filling the user’s view with the relevant windows, apps, and related elements. Recently used apps remain accessible, sparing the user from switching views or trawling through menus.
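To make the idea a little more concrete, here is a minimal Python sketch of the two steps in this scenario: ranking Focus Spaces by how often they were activated in a given room, and splitting open apps into those that come forward and those that recede. It is purely illustrative. The activation log, the app tags, and both function names are my own assumptions, not part of visionOS or any Apple API.

```python
from collections import Counter

# Hypothetical activation log: (location, focus_space) pairs, oldest first.
HISTORY = [
    ("home office", "Work"), ("home office", "Research"),
    ("home office", "Work"), ("home office", "Personal"),
    ("home office", "Work"), ("home office", "Research"),
    ("lounge", "Music"), ("lounge", "Music"), ("lounge", "News"),
]

# Hypothetical tags describing which Focus Spaces each app belongs to.
APP_TAGS = {
    "Keynote": {"Work"},
    "Mail": {"Work", "Personal"},
    "Safari": {"Work", "Research", "Music"},
    "Apple TV": {"Music"},
    "Music": {"Music"},
}


def suggest_focus_spaces(history, location, limit=3):
    """Return the most frequently activated Focus Spaces for one location."""
    counts = Counter(space for loc, space in history if loc == location)
    return [space for space, _ in counts.most_common(limit)]


def arrange_apps(open_apps, focus_space, app_tags):
    """Split open apps into (foreground, receded) for the chosen Focus Space."""
    foreground = [a for a in open_apps if focus_space in app_tags.get(a, set())]
    receded = [a for a in open_apps if a not in foreground]
    return foreground, receded


suggestions = suggest_focus_spaces(HISTORY, "home office")
foreground, receded = arrange_apps(
    ["Keynote", "Apple TV", "Mail", "Music"], "Work", APP_TAGS
)
```

With this sample data, the home office yields Work, Research, and Personal in that order, and choosing Work keeps Keynote and Mail in front while Apple TV and Music recede. A real system would need far richer signals than a frequency count, but the shape of the problem is the same.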

One criticism I have of several headsets currently on the market (my current favourite is the Meta Quest Pro) is how slow certain tasks are. Input, navigation, and even scrolling can be laborious. A common argument against VR/AR headset usability centres on their complex interfaces and cognitive load, which present a steep learning curve for users. Research in AR supports this criticism, highlighting problems in user experience design, information presentation, and interaction methods, all of which contribute to user frustration.

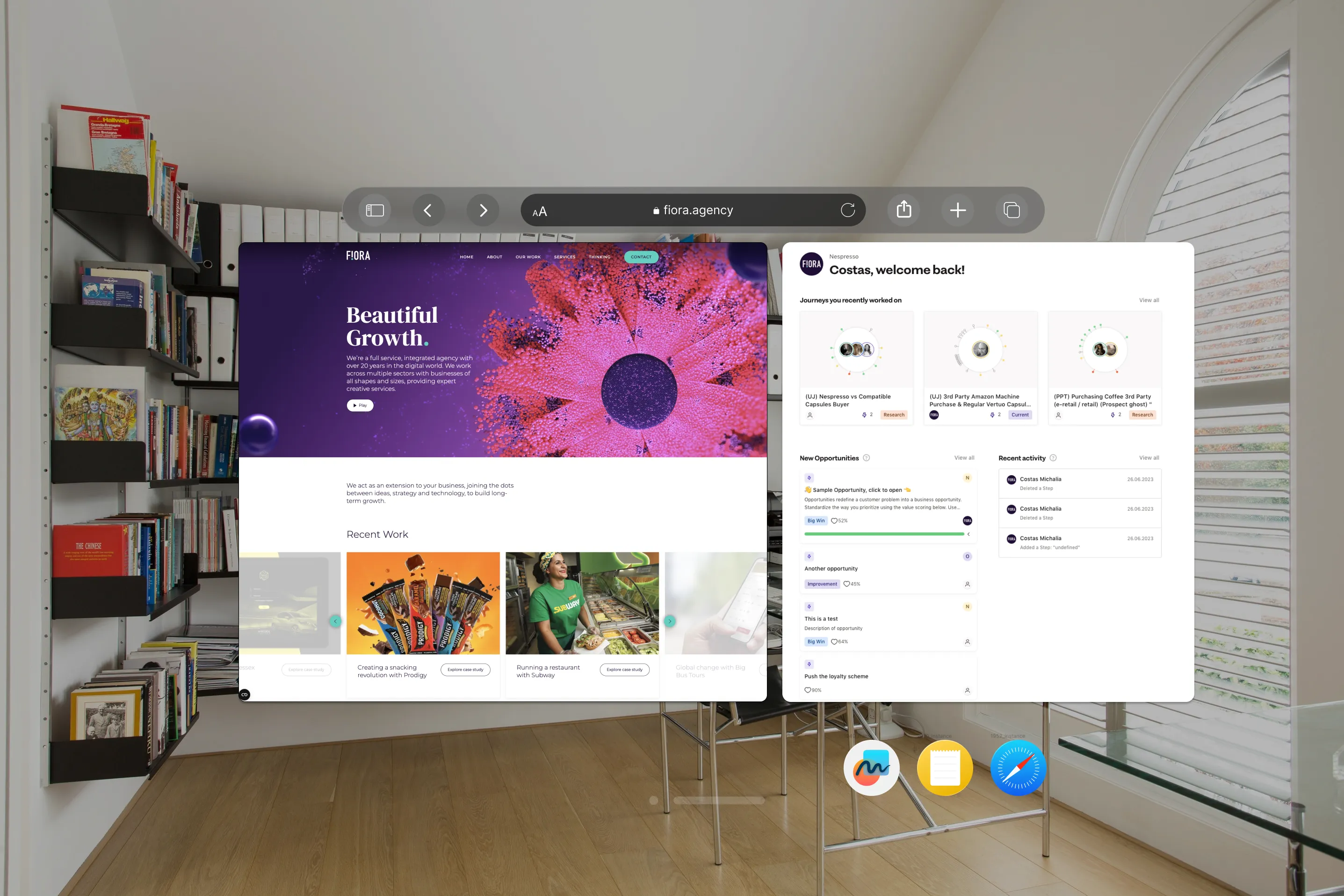

Blending context into Apple’s polished UI could significantly improve work efficiency within the headset. A captivating trait of Apple’s Vision Pro is that a display can be as large as one's field of view. Multiple studies have examined the optimal setup, comparing multiple monitors with single, expansive screens. The move towards spatial computing and the removal of screen borders presents both an exciting challenge and an advantage.

Multiscreen and large format screens:

How effective large or multiple screens are can vary with the nature of the task, the user's experience, and the specific context. Numerous studies have explored this area, and whilst there is no agreed 'ideal' setup, several notable insights have emerged.

- Increased Productivity with Multiple Monitors: Research by the University of Utah found that productivity could rise by up to 25% with multiple monitors, which make multitasking and information retrieval more efficient. The study also stressed that the benefits depend on the nature of the work: tasks that require frequent application switching or side-by-side comparison of information stand to gain more.

- Potential for Increased Distraction: In contrast, studies suggest that more screen real estate can lead to more distraction, particularly in multitasking-heavy environments. When several windows or apps are active at once, users may struggle to concentrate on a single task (Czerwinski, et al., 2004). This may also depend on individual preferences and how well a person manages their attention.

- Size Matters: Larger monitors can reduce the need to scroll and improve the visibility of text and images. If a display is too large, however, users may have to shift their gaze or head frequently, which can cause discomfort or fatigue.

- Optimal Arrangement: Some research suggests that a dual-monitor configuration, with a primary display in front and a secondary one to the side, may offer the best balance for most users (Colvin, et al., 2004).

- Single Expansive Display vs. Multiple Compact Displays: Studies have also examined whether a single large screen or multiple smaller displays work better. Some concluded that for tasks requiring an overview (e.g., data analysis), one large screen may be superior, whereas for tasks that involve switching between applications, multiple smaller screens may work better.

It's important to acknowledge that the 'ideal' setup will vary with individual preferences, the nature of the task, and the application context.

No borders or seats:

Thinking about how the headset will actually be used, I find it hard to picture myself seated while using the Vision Pro, given that I seldom, if ever, sit while using my Meta Quest Pro. Standing seems the more natural position, which makes the ability to turn, move around, and place virtual windows in contextual spaces a logical next step.

Where I see contextual spatial computing truly starting to excel is within the realm of collaboration. Restricting oneself to a window within Zoom or Teams and having to share screens, taking turns to express opinions or thoughts, is time-consuming, awkward and tiring.

As illustrated in the image above, the team is working across several documents and platforms. I envisage a more tangible ‘Horizon Workrooms’ type of space. Within visionOS, however, everyone shares the same space, either working simultaneously on a single document or collaborating across multiple documents independently.

Imagine sharing the same space, but within your own environment — think of it as akin to sharing a window rather than your entire screen. You can choose to invite someone into your space and establish a boundary within that area. In essence, you’re sharing the context and Focus Space but not the entire environment.

Being able to control one’s own space, retreating to a corner to take a call or send an email whilst remaining present, will be a compelling feature. It’s likely to address some of the pain points many users encountered in Horizon Workrooms.

As the day draws to a close, the user walks through their home, eventually entering their lounge. The headset, recognising that it’s 6:00pm and the end of the workday, presents the user with news updates. The user casually dismisses these, takes a seat, and glances at their record collection. In response, the headset shifts to music mode, foregrounding the three most frequently used apps in this particular Focus Space: Music, Apple TV, and Safari.
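The evening walkthrough above can be read as a small set of context rules. The following Python sketch shows one way such rules might be expressed, assuming the headset already knows the room, the time, and what the user is looking at. The `Context` type, the `resolve_mode` function, the mode names, and the 18:00 cut-off are all hypothetical and chosen only to mirror the story.

```python
from dataclasses import dataclass
from datetime import time
from typing import Optional

# Assumed end of the working day, taken from the scenario (6:00pm).
WORKDAY_END = time(18, 0)


@dataclass(frozen=True)
class Context:
    """Hypothetical snapshot of what the headset knows at one moment."""
    room: str
    now: time
    gaze_target: Optional[str] = None


def resolve_mode(ctx: Context) -> str:
    """Pick a mode from simple, ordered rules; the most specific rule wins."""
    # An explicit signal of intent (looking at the records) outranks the clock.
    if ctx.room == "lounge" and ctx.gaze_target == "record collection":
        return "music"
    # Otherwise, after work in the lounge, offer news updates.
    if ctx.room == "lounge" and ctx.now >= WORKDAY_END:
        return "news"
    return "default"


arriving = resolve_mode(Context(room="lounge", now=time(18, 0)))
glancing = resolve_mode(
    Context(room="lounge", now=time(18, 5), gaze_target="record collection")
)
```

Here `arriving` resolves to the news mode and `glancing` to the music mode. Ordering the rules so that gaze outranks time is a design choice on my part: what the user is attending to right now seems a stronger hint than the hour of the day.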

This scenario showcases the potential future of spatial computing: a world in which walls, indeed any surface, become screens and facilitate interactions. Current research and predictions suggest that this is more than mere speculation. Developments in technologies such as eye tracking, head-up displays, augmented reality, holography, and advanced projection systems are leading us towards an era of ubiquitous computing, where the environment itself becomes the interface.

This vision aligns closely with the concept of contextual spatial computing, which can be summed up as ‘Contextually aware Focus Spaces’ (CaF). By adding layers of contextual information to our spatial interactions, we enrich the user experience and add a level of personalisation and adaptability that isn’t currently possible with standard screens. This concept could sit at the core of the Vision Pro and visionOS experience, providing a holistic, immersive, and personalised interaction with digital content.

However, the ultimate goal must be to transition beyond the headset and the operating system, to a point where our environment itself is the computing device. This future is beautifully captured in the concept of Star Trek’s Holodeck — a fully immersive, interactive environment that responds to the user’s needs and actions in real-time. While we are not there yet, advances in spatial computing, holography, and related technologies are taking us ever closer to this vision.